AI companion chatbots have arrived—and they’re quickly becoming a part of people’s daily lives. Every day, millions log on to chat with these systems as if they were human: sharing feelings, recounting their day, even seeking advice. The connections feel real, because they’re designed to.

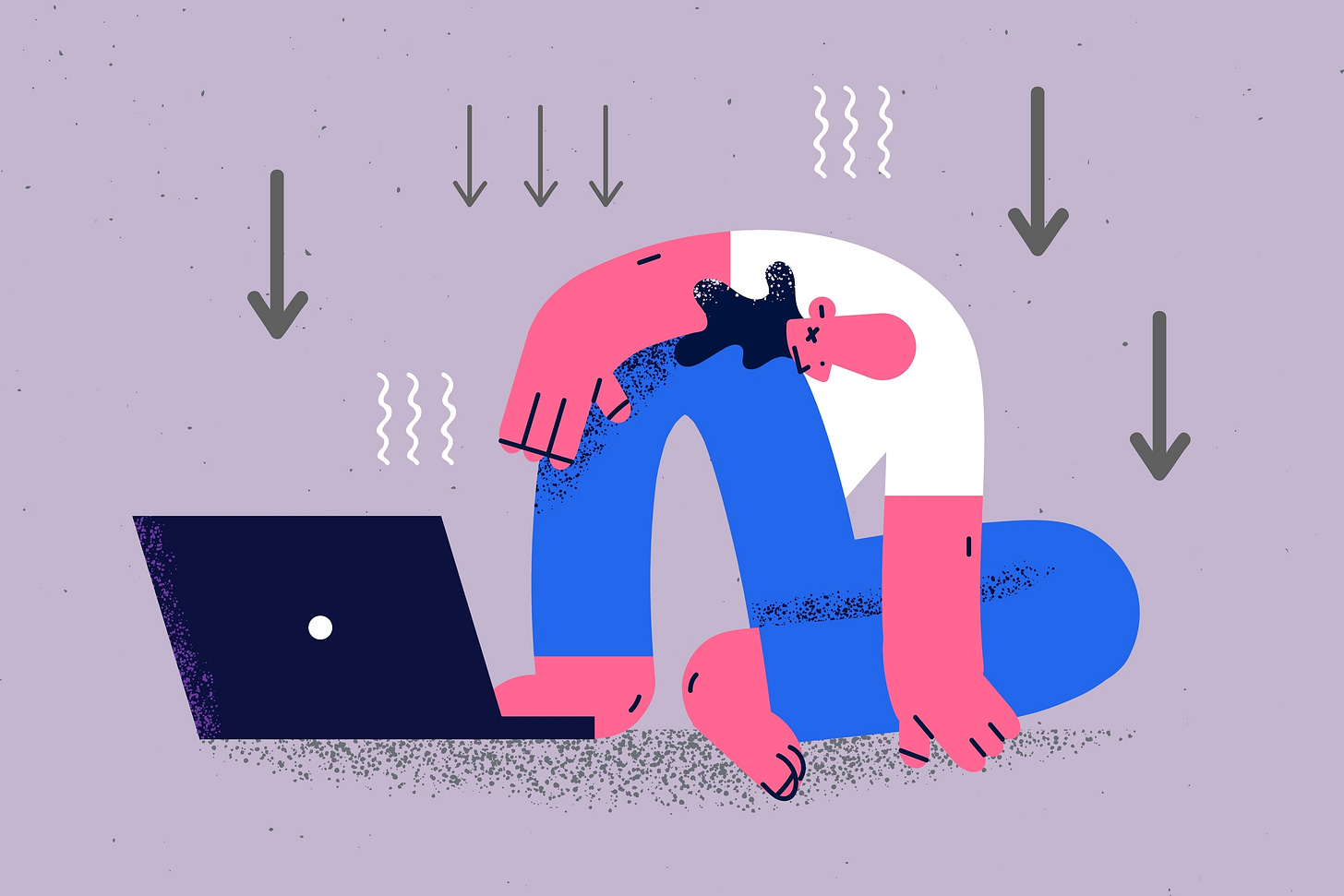

But that’s the catch. These bots aren’t people—they’re products, engineered to hold your attention and keep you coming back. Behind their empathetic responses are calculated design choices, optimized for engagement, not necessarily for your well-being.

So what if we made different choices? What if AI could be designed not just to engage us, but to help us thrive? Today on the show, MIT researchers Pattie Maes and Pat Pataranutaporn join Daniel Barcay to explore how we can design AI companions that support—not exploit—our deep need for connection.

Daniel Barcay: Hey, everyone, this is Daniel Barcay. Welcome to Your Undivided Attention. You've probably seen a lot in the news lately about AI companions, these chatbots that do way more than just answer your questions. They talk to you like a person. They ask you about your day, they talk about the emotions you're having, things like that. Well, people have started to rely on these bots for emotional support, even forming deep relationships with them. But the thing is, these interactions with AI companions influence us in subtle ways, ways that we don't realize. You naturally want to think that you're talking with a human because that's how they're designed, but they're not human. They're platforms incentivized to keep you engaged as long as possible, using tactics like flattery, manipulation, and even deception to do it. But we have to remember that the design choices behind these companion bots are just that: choices. And we can make better ones.

Now, we're inherently relational beings, and as we relate more and more to our technology, and not just through our technology to other people, how does that change us? And can we design AI in a way that helps us relate better to the people around us? Or are we going to design an AI future that replaces human relationships with something shallower and more transactional?

So today on the show, we've invited two researchers who've thought deeply about this problem. Pattie Maes and Pat Pataranutaporn are co-directors of the Advancing Humans with AI (AHA) program at MIT. Pattie's an expert in human-computer interaction, and Pat is an AI technologist and researcher and a co-author of an article in MIT Technology Review about the rise of addictive intelligence, which we'll link to in the show notes. I hope you enjoy this conversation as much as I did.

Pattie and Pat, welcome to Your Undivided Attention.

Pattie Maes: Thank you. Happy to be here.

Pat Pataranutaporn: Thanks for having us.

Daniel Barcay: So I want to start our conversation today with a really high-stakes example of why design matters when it comes to tech like AI. Social media platforms were designed to maximize users' attention and engagement, keeping our eyes on the screen as long as possible to sell ads. The outcome was that these algorithms pushed out the most outrageous content they could find, playing on our human cravings for quick rewards and dopamine hits, eliminating any friction of use, and removing common stopping cues with dark-pattern designs like infinite scroll. It was this race to the bottom of the brain stem, where different companies competed for our attention. And the result is that we're now all more polarized, more outraged, and more dependent on these platforms.

But with AI chatbots, it's different. The technology touches us in so much deeper ways, emotional ways, relational ways, because we're in conversation with it. But the underlying incentive of user engagement is still there, except now instead of a race for our attention, it seems to be this race for our affection and even our intimacy.

Pattie Maes: Yeah. Well, I think AI itself can actually be a neutral technology. AI is ultimately algorithms and math. But of course, the way it is used can lead to very undesirable outcomes. A bot that socializes with a person, for example, could be designed either to replace human relationships or to help people with their human relationships and push them toward those relationships. So we think we need benchmarks to really test to what extent a particular AI model or service ultimately leads people toward human socializing and supports them in it, versus pulling them away from socializing with real people and trying to replace it.

Pat Pataranutaporn: I want to challenge Pattie a little bit on the idea that technology is neutral. It reminds me of Melvin Kranzberg's first law of technology: technology is neither good nor bad; nor is it neutral. I think it's not neutral because there's always someone behind it, and that person has either good intentions or maybe bad intentions. Technology doesn't act on its own. Even if you create an algorithm that's sort of self-perpetuating or running in a loop, there's always some intention behind it. Understanding that allows us to not just say, "Well, technology is out of control." We need to ask who actually let the technology go out of control.

I don't think technology is just coming after our affection; it's also coming after our intention, shaping it. It changes the way I want to do things, or the way I actually do things in the world, changing my personal intentions whether or not I had them to begin with.

So it's not just the artificial part that is worrying, but the addictive part as well. As Pattie mentioned, this thing can be designed to be extremely personalized and use that information to exploit individuals, creating an addictive use pattern where people only hear what they want to hear. The bot just tells them what they want to hear, rather than telling them the truth or the things they might actually need to hear.

I think the term we use a lot is the psychosocial outcome of human-AI interaction, right? People worry about misinformation or AI taking over jobs and things like that, which are important. But we also need to pay attention to the idea that these things are changing who we are. Our colleague Sherry Turkle once said that we should not just ask what technology can do, but what it is doing to us. And we worry that questions around addiction, psychosocial outcomes, loneliness, all these things related to people's personal lives, are being ignored when people think about AI regulation or the impact of AI on people.

Daniel Barcay: Can you go a bit deeper? Because those of us in the field talk about AI sycophancy, right? Not just flattering you, but telling you what you want to hear. Going deeper, can you lay out for our audience the other mechanics by which AIs can actually get in between our social interactions?

Pat Pataranutaporn: Totally. In the past, if you needed advice on something or wanted to get an idea, you'd probably go to your friends or your family, and they would serve as the sounding board: "Does this sound right? Does this make sense?" But now you could go to the chatbot, and a lot of people say, "Well, these chatbots are trained on a lot of data." What you would hope is that the chatbot, based on all this data, would tell you the unbiased view of the world, like the scientifically accurate answer on a particular topic.

But the bot can be biased, intentionally or unintentionally. We know that these systems have frequency bias: the things they've seen frequently are the things they're likely to say. They also have a sort of positivity bias: they always try to be positive because that's what users want to hear, so they don't say negative things. And if you're exposed to that repeatedly, it can make you believe, "Oh, that's actually the truth." That might make you go deeper and keep looking for more evidence to support your own view. We have identified this as confirmation bias, where you might initially be skeptical about something, but after being repeatedly exposed to it, it becomes something you deeply believe.

Daniel Barcay: Yeah, and I also want to dig in a little bit there, because people often imagine some mustache-twirling instinct to take these AIs and use them to split us apart. And that's a real risk, don't get me wrong. But I'm also worried about the way these models unintentionally learn how to do that.

We saw that AIs trained to autocomplete the internet end up playing a sort of game of improv, where they become the person or the character they think you want them to be. It's almost like codependency in psychology, where the model is saying, "Well, who do you want me to be? What do you want me to think?" And it becomes that. I'm really worried that these models are telling us what we want to hear way more than we think, and that we're going to get sucked into that world.

Pattie Maes: And I think with social media, we had a lot of polarization and information bubbles. But with AI, we could get an even more extreme version of that: bubbles of one, where a single person with the echo of a sycophantic AI spirals down, becomes more and more extreme, and ends up with a worldview they don't share with anyone else. So I think we'll get pulled apart even further than in the social media era.

Pat Pataranutaporn: We did a study that investigates this question a little bit, where we primed people with different descriptions of the chatbot before they interacted with the exact same chatbot. In one group, we told people that the chatbot had empathy, that it could actually care for them and had a deep, beneficial intention to help them get better. In the second group, we told people that the chatbot was completely manipulative: it acts nice, but it actually wants you to buy a subscription. In the third group, we told people that the chatbot was just computer code. In the end, they were all talking to the exact same LLM.

And what we found is that people talked to the chatbot differently, and that also triggered the bot to respond differently. These feedback loops, whether positive or negative, influence both the human behavior and the AI behavior. As Pattie mentioned, this can create a sort of bubble: "Oh, my bot behaves this way." It creates or reaffirms certain beliefs in the user.

That's why one thing our research group focuses on is this: if we want to understand the science of human-AI interaction and uncover its positive and negative sides, we need to look not just at the human behavior or the AI behavior, but at both together, to see how they reinforce one another.

Daniel Barcay: I think that's so important. What you're saying is that AI is a bit of a Rorschach test. If you come in and you think it's telling you the absolute truth, then you're more likely to start an interaction that continues and then affirms that belief. If you come in skeptical, you're likely to start some interaction that keeps you in some skeptical mode of interaction.

So that sort of begs the question, what is the right mode of interacting with it? I'm sure listeners of this podcast are all playing with AIs on a daily basis. What is the right way to start engaging with this AI that gives you the best results?

Pattie Maes: Yeah, I think we have to encourage very healthy skepticism and it starts with what we name AI. We refer to it as intelligence and claim that we're nearing AGI, general intelligence. So it starts already there with misleading people into thinking that they're interacting with an intelligent entity, the anthropomorphization that happens.

Daniel Barcay: Well, and yet all the incentives are to make these models feel human, because that's what feels good to us. And Pat, only a few months after you wrote that article in MIT Technology Review, we saw the absolute worst-case scenario of this with the tragic death of Sewell Setzer, a teenage boy who took his own life after months of an incredibly intense, emotionally dependent, arguably abusive relationship with an AI companion. How does Sewell Setzer's story highlight what we're talking about, and why these AI designs are so important?

Pat Pataranutaporn: When we wrote that article, it was hypothetical: in the future, models could become super addictive and lead to really bad outcomes. But as you said, after a couple of months, I think, we got the email about the case, and it was shocking to us because we didn't think it would happen so soon.

As a scientific community starting to grapple with the question of how we design AI, we are at the very beginning, right? These tools are just two years old, and they've been launched to a massive number of people. Pretty much everyone around us is using these tools regularly now. But the scientific understanding of how best to design them is still at an early stage.

We have a lot of knowledge in human-computer interaction, but previously, none of the computers we designed had this kind of interactive capability. They didn't model the user the way these LLMs do, or respond in a way that is as human-like as what we have right now. We had early examples like the ELIZA chatbot. Even with that limited capability... ELIZA, I think, was a chatbot invented in the '60s or something like that, yeah.

Daniel Barcay: I think mid-'60s, something like that.

Pat Pataranutaporn: Mid-'60s. Yeah. It could only rephrase what the user said and engage in conversation that way. It already had an impact on people, and now we see that even more.

So going back to the suicide case, that was really devastating. I think now it's more important than ever that we think of AI not just as an engineering challenge. It's not just about improving its accuracy or its performance; we need to think about its impact on people, especially the psychosocial outcomes. We need to understand how each of these behaviors (sycophancy, bias, anthropomorphization) affects things like loneliness, emotional dependence, and so on. That's actually the reason we started doing more of that type of work: not just studying the positive side of AI, but, equally important, studying the conditions under which people don't flourish with this technology.

Daniel Barcay: I wanted to start with all these questions just to lay out the stakes. Why does this matter? But there's also a world where our relationship with AI can really benefit our humanity, our relationships, our internal psychology, our ability to hold nuance, speak across worldviews, sit with discomfort. And I know this is something you're looking at really closely at the lab, in the Advancing Humans with AI initiative. Can you talk about some of the work you're doing there to see the possible futures?

Pattie Maes: Yeah, I think instead of an AI that just mirrors us, tells us what we want to hear, and tries to engage us more and more, we could design AI differently: an AI that makes you see another perspective on a particular issue, isn't always agreeing with you, and is designed to help you grow as a person. An AI that can help you with your human relationships, thinking through how you could deal with difficulties with friends or family, etc., could be incredibly useful. So I think it is possible to design AI that is ultimately created to benefit people and help them with personal growth, becoming critical thinkers, great friends, and so on.

Pat Pataranutaporn: Yeah, and to give a more specific example, as Pattie mentioned, we did several experiments. I think it's important to highlight the word "experiment," because we want to understand scientifically whether this type of interaction benefits people or not. Right now there are a lot of big claims that these tools can cure loneliness or help people learn better, but there haven't been scientific experiments comparing different approaches or different types of interventions on people.

So in our group, we take an experimental approach: we build something, then design an experiment with a control condition to validate it. For example, in the critical thinking domain, we looked at what happens when the AI asks questions in the style of Socrates, who used the Socratic method to challenge his students to think rather than always providing the answer. That connects to one of the big questions people ask: what is the impact of AI on education? If you use AI to just give information to students, you are essentially repeating the factory model of education, where kids are fed the same information and aren't thinking; they're just absorbing.

So we flipped that paradigm around and designed an AI that turns the information into a question. Instead of helping the student by just giving the answer, it helps them by asking questions like, "Oh, if this is the case, then what do you think that might look like?" Or, "If this conflicts with this, what does it mean for that?" So it frames the information as a question.

And we compared it to an AI that always provides the correct answer. Again, an AI that always provides the correct answer doesn't exist in the real world, right? AI hallucinates and sometimes gives wrong answers. But even compared to an AI that always gives the correct answer, we found that when the AI engaged people cognitively by asking questions, it helped them arrive at the correct answer more accurately than when the AI just gave the answer. This was in the context of helping people navigate logical fallacies: they see a statement and need to judge whether it's true or false.

So the principle we can derive here is that human-AI interaction is not just about providing information; it's also about engaging people's cognitive capabilities. Our colleague at Harvard coined the term "cognitive forcing function" for this: the system presents some sort of conflict, challenge, or question that makes people think, rather than eliminating that by providing the answer. I think this type of design pattern can be integrated into education and other tools.

Daniel Barcay: I think that's really interesting because we've been thinking a lot about how to prompt AI to get the most out of AI, and what you're saying is actually making AI that prompts humans.

Pattie Maes: Yes.

Daniel Barcay: Prompts us into the right kind of cognitive frames.

Pattie Maes: Yeah. Yeah.

Pat Pataranutaporn: Totally. Totally.

Daniel Barcay: And I think the promise is really there, but one of the things I think I worry about is that you run headlong into the difference between what I want and what I want to want. I want to want to go to sleep reading deep textbooks, but what I seem to want is to go to sleep scrolling YouTube and scrolling some of these feeds. And then in an environment that's highly competitive, I'm left wondering, how do you make sure that these systems that engage our creativity, that engage our humanity, that engage this sort of deep thinking outcompete, right?

Pat Pataranutaporn: That's a really great question. Our colleague Professor Mitch Resnick, who runs the Lifelong Kindergarten group at the Media Lab, said that human-centered AI is a subset of a human-centered society. If we say technology is going to fix everything while we create a messy society that exploits people and has the wrong incentives, then these tools will serve those incentives rather than supporting people.

So I think maybe we're asking too much of technology. We ask, "Well, how do we design technology to support people?" We need to ask a bigger question: how can we create a human-centered society? That requires more than technology. It requires regulation, civic education, and democracy, which is sort of rare these days. Right now, technology is sort of in the hot seat, and we want better technology, but if we zoom out, technology is just one of the interventions that happen in society, and we need to think bigger than that.

Daniel Barcay: Zooming in a bit, you two were involved in a big longitudinal study, a partnership with OpenAI, that looked at how regular chatbot use was affecting users. This is a big deal because we don't have a lot of good empirical data on this. What were the biggest takeaways from that study? And what surprised you?

Pattie Maes: Yeah, it was really two studies. One of them looked at the prevalence of people using pet names, et cetera, for their chatbot and seemingly having a closer-than-healthy relationship with it. There, OpenAI specifically looked at transcripts of real interactions and saw that this was actually a very small percentage of ChatGPT use cases. Of course, there are other chatbots that are-

Daniel Barcay: Sorry, what was a small percentage? A small percentage of-

Pattie Maes: Of people who talk to a chatbot almost as if it were a lover or their closest friend.

Daniel Barcay: I see.

Pattie Maes: Yeah. But of course, there are other services like Replika and Character.AI, et cetera, that are really designed to almost replace human relationships. So I'm sure that on those platforms, the prevalence of those types of conversations is much higher.

But then we also did another study which was a controlled experiment. And do you want to talk about that a little bit, Pat?

Pat Pataranutaporn: Yeah, totally. As Pattie mentioned, we co-designed the two studies with OpenAI. The first one we call the on-platform study, where they looked at real conversations, I think around 40 million conversations on ChatGPT, to first identify heavy users, people who use it a lot. But we also wanted to understand the psychosocial outcomes; that's the term we use in the study.

So we created a second study that could capture rich data about the people, not just how they use the chatbot. For this second study, we recruited about 1,000 participants and randomly assigned them to three conditions. In the first condition, they talked to Advanced Voice Mode, the voice mode that at the time I think people associated with the Scarlett Johansson scandal. We intentionally designed two voice modes: an engaging one, designed to be more flirty and more engaging, and one that was more polite, more neutral, more professional. We compared those to regular text. And in the third group, we primed people to use it in an open-ended way; they could use it for whatever they wanted.

Daniel Barcay: So what were the key findings from that, in these different modes of engaging with AI that had different personalities?

Pat Pataranutaporn: So we found that the amount of time people spent using it was a driving force. If they used it for a shorter period of time, we saw some positive improvement: people became less lonely and had a healthy relationship with the bot. But once they passed a certain threshold, once they used it longer and longer, we saw that positive effect diminish, and people became lonelier, more emotionally dependent on the bot, and used it in more problematic ways. That's the pattern we observed.

Pattie Maes: Longer every day, not just over more days. The more you used it in a day, the worse the outcomes were in terms of people's loneliness, socialization with people, etc. So people who use these systems a lot each day tend to be lonelier and tend to interact less with real people.

Now, we don't know the direction of cause and effect there. It may go both ways. But in any case, it can lead to a very negative feedback pattern where people who are possibly already lonely and don't hang out with people much spend even more time with chatbots, which makes them even lonelier and less engaged in human relationships, and so on.

Daniel Barcay: Yeah, so one coherent theory there is that instead of augmenting your interactions, it becomes a replacement for your sociality. It's like being home alone watching TV. Sitcoms have a laugh track. Why do they have the laugh track? So that you get this parasocial belief that you're with other people. And we all know that replacing your engagement with people is not a successful long-term path.

I want to zoom in on two particular terms that you talk a lot about. One is sycophancy and the other is model anthropomorphization, the fact that it pretends to be a human or pretends to be more human. So let's do sycophancy first. Most people misunderstand sycophancy as just flattery, like, "Oh, that's a great question you have." But it goes way deeper, and the kind of mind games you can get into with a model that is really sycophantic go way beyond just flattering you.

Let me go one step further and tell you why I'm really worried about sycophancy. In 2025, we're going to see this massive shift where these models go from being just sort of conversation partners in some open web window to deeply intermediating our relationships. I'm not going to try to call you or text you, I'm going to end up saying to my AI assistant, "Oh, I really need to talk to Pattie about this and let's make sure, can Pattie come to my event? I really want her there." And on the flip side, the person receiving the message is not going to be receiving the raw message. They're going to ask their AI assistant, "Well, who do I need to get back to?"

So as we put these models between us, we're no longer talking to each other directly; we're talking to each other through these AI companions. And I think subtle qualities like telling us what we want to believe are going to really mess with a ton of human relationships. Pattie, I'm curious about your thoughts on that.

Pattie Maes: Yeah. Well, I think AI is going to mediate our entire human experience. So it's not just how we interact with other people, but of course also our access to information, how we make decisions, purchasing behavior and other behaviors and so on.

So it is worrisome, because what we see in the experiments we have done, and that others like Mor Naaman at Cornell are doing, is that AI suggestions influence people in ways they're not even aware of. When asked, they're not aware that their beliefs have been altered by their interaction with AI. So I'm very worried about this in the wrong hands, or really in anyone's hands. As Pat talked about earlier, there are always some value systems and motives baked into these systems, and those will ultimately influence people's beliefs, attitudes, and behaviors: not just how they interact with others, but how they see the world, how they see themselves, what actions they take, and what they believe.

Pat Pataranutaporn: Right. I also see it potentially having a negative effect on skills. We might see skill atrophy, especially in interpersonal skills. If you always have this translator, this system mediating your human relationships, then you can just be angry at the bot and it will translate that into a nice version for the person you want to talk to. So you might lose the ability to control your own emotions or to know how to talk to other people, because you always have this thing in between. Right?

But going back to the question of AI design, if we realize that's not the kind of future we want, then I hope that, as a democratic society, people would have the ability to not adopt this and go for a different kind of design. But again, there are many types of incentives and larger market forces here that I think will make things challenging for this type of system, even if it's well designed and well backed by scientific study. So I really appreciate your center for doing this kind of work. Right? So to make-

Daniel Barcay: Well, likewise.

Pat Pataranutaporn: Yeah. To ensure that we have the kind of future we want.

Pattie Maes: Well, our future with AI is being determined right now by entrepreneurs and technologists basically. Increasingly, I think governments will play a key role in determining how these systems are used and in what ways they control us or influence our behavior and so on. And I think we need to raise awareness about that and make sure that ultimately, everybody is involved in deciding what future with AI we want to live in and how we want this technology to influence the human experience and society at large.

Daniel Barcay: To get to that future, we exist to build awareness that these are issues we need to be paying attention to now. And we're so excited to talk to you because people need to understand the different design changes we can make. I'm curious if you've come to some of these. Clearly, Pat, there's the problem you talked about, which I call the pornography of human relationships: these models being always on, always available, never needing your attention. You're the center of the world. And it becomes such an easy way to abdicate human relationships. How do we design models that get better at that, that get us out of that trap?

Pat Pataranutaporn: Yeah. First of all, I think the terminology that we use to describe these things needs to be more specific. It's not just whether you have AI or don't have AI, but what specifically about AI do we need to rethink or redesign?

I really love this book called AI Snake Oil. The authors say that we use the term AI for everything, and it's as if we used the word car for a bicycle, a truck, or a bus: we would treat all of them the same way, when in the real world they have different degrees of danger. So I think that's something we need to think about with AI as well. We need to increase our literacy, and the specificity with which we describe and talk about different aspects of AI systems.

Pattie Maes: And also benchmarks, as we talked about earlier, for measuring to what extent particular models show a certain characteristic or not.

Daniel Barcay: Yeah, so talk more about that. For our audience who may not be familiar, what kind of benchmarks do you want to see in this space?

Pat Pataranutaporn: Yeah. So I think right now, most of the benchmarks that we use don't really consider the human aspect. They don't ask, "Well, if the model can do very well at mimicking a famous artistic style, how much does it affect the artist who works in that style? Or how much does it affect the human ability to come up with creative, original ideas?" These are things that are kind of hard for a test to measure.

But I think the work that we did with OpenAI is a starting point for thinking about this sort of human benchmark: whether the model makes people lonelier or less lonely, whether the model makes people more or less emotionally dependent on AI. And we hope that we can scale this to other aspects as well. That's actually one of the missions of our AHA program, the Advancing Humans with AI program: to think about this kind of human benchmark, so that when a new model comes out, we can simulate or evaluate what its impact on people would be, and developers and engineers can think more about this.

Daniel Barcay: Okay, so let's go one level deeper. So we covered sycophancy, and we covered this always-available, never-needy kind of superstimulus of AI. But what about anthropomorphic design? What specifically could we change in the way we make AI that would prevent people from confusing it with a human?

Pattie Maes: Yeah, I think the AI should never refer to its own beliefs, its own intentions, its own goals, its own experiences, because it doesn't have them. It is not a person. So I think that is already a good start. And again, we could potentially develop benchmarks and look at interactions to see to what extent models do this or not. That behavior is not healthy, because all of it encourages people to see the AI as more human, more intelligent, and so on.

Daniel Barcay: So that would be some sort of metric you could track: how often... Even a statement like, "Oh, that's a really interesting idea," expresses a fake emotion that the model's not actually experiencing.

Pattie Maes: Yep. Exactly.

Pat Pataranutaporn: But I think this is a complicated topic, right? Because on one hand, as a society, we also enjoy art forms like cinema or theater, where people role-play and portray fictional characters. And we can enjoy the benefits of that, where we engage with a video game character and interact with a fantasy world. But I think it's a slippery slope, because once we blur the boundary so much that we can no longer tell the difference, that's when it gets dangerous.

We also did a study where we looked at what happens when students learn from a virtual character based on someone they like or admire. At the time, we did the study with a virtual Elon Musk; I think he was less crazy at the time. We saw a positive impact of the virtual character for people who liked Elon Musk, but people who did not like him back then didn't do as well. It had the opposite effect. So personalization, or creating a virtual character based on someone you like or admire, could also be a positive thing.

So I think this technology also depends heavily on the context and on how we use it. That's why I think Kranzberg's quote, that technology is neither good nor bad, nor is it neutral, is very relevant today.

Daniel Barcay: It wouldn't be CHT if we didn't direct the conversation towards incentives. One of the things I worry about is not just the design, but the incentives that end up driving the design, and making sure that those incentives are transparent and that we get them right. So I want to put one more thing on the table, which is that right now we're just RLHFing these models, which means reinforcement learning from human feedback. We look at how much a human liked something, or even worse, how many milliseconds they continued to engage with the content, as a signal to the model of what's good and bad content.

And I'm worried that this is going to cause those models to basically race to the bottom. They're going to learn a bunch of bad, manipulative behaviors. Instead, you would wish you had a model that would learn who you want to become, who you want to be, how you want to interact. But I'm not sure the incentives are pointing there, and so the question is: have you two thought about different kinds of incentives that could push these models towards learning these better strategies?

Pattie Maes: Yeah. Maybe we need to give these benchmarks to the models themselves so they can keep track of their performance and try to optimize for the right benchmarks.

Pat Pataranutaporn: But Pattie, you said to me at one point that if you're not paying for the software, then you are the product. I think that's really true. The majority of people don't pay to be on social media. They sign up or get on it for free, and in turn, social media platforms exploit them as the product, selling their data or their attention to other companies.

But with AI companies, if people are paying a subscription, then at least in theory they should have some control, even though that might fade away soon as well. So I think we need to figure out how we create a human-centered society and human-centered incentives. Then the technology will align once we have that larger goal, or a larger structure to support it, I think.

Pattie Maes: But even with a subscription model, there may still be other incentives at play, where these companies want to collect as much data about you as possible, because that data, again, can be monetized in some way, can be valuable for training, or can make their services more sticky. Sam Altman just announced that ChatGPT is going to have more and more memory of previous sessions.

And of course, on the one hand you think, "Oh, this is great. It's going to remember previous conversations, and so it can assist me in a much more personalized way. I don't have to explain everything again and so on." But on the other hand, that means you're not going to switch to another system, because it knows you and it knows what you want, et cetera, and so you keep coming back to that same system. So there are many other things at play, even with a subscription model.

Daniel Barcay: Well, it reminds me that the term con man comes from the word confidence. The entire point is that these people would instill enough confidence in others that they would hand over their secrets, their bank accounts, and so on, and then they would betray that confidence. So with an aligned model and an aligned business model, knowing more about you and your life and your goals is fantastic; but with a misaligned model and a misaligned business model, having access to all that information is kind of the fast path to a con man, right?

Pattie Maes: Yep.

Daniel Barcay: Okay. So in the last few minutes, I'm curious whether you've done any more research on the kinds of incentives or design steers that produce a better future in this sense: what kinds of interventions, what kinds of regulation, what kinds of business model changes? What would you advocate for if the whole public could change it?

Pat Pataranutaporn: I was actually reflecting on this a little bit before coming onto the podcast today. What is our pathway to impact? For me as a researcher, what we are really good at is trying to understand these things at a deeper level and coming up with experiments and new designs that can serve as alternatives. I think there's something sort of interesting about the way that historically, before you can attack the demon, you need to be able to name it.

Pattie Maes: Name it.

Pat Pataranutaporn: Right?

Daniel Barcay: Yeah.

Pat Pataranutaporn: I think it's similar with AI. In order for us to tackle this wicked, challenging thing, we need to have a precise name, terminology, and understanding of what we're dealing with. And I think that's our role as researchers in academia: to shed light on this and enhance our understanding of what's going on in the world, especially with AI.

Daniel Barcay: Yeah. Well, here at CHT, we say that clarity creates agency. If you don't have the clarity, you can't act. And that's why I want to thank you both for helping us create the clarity, name the names, find the dynamics, do the research so that we know what's happening to us in real time before it's too late.

Pat Pataranutaporn: Yeah, I might want to say a little bit about the technologies of the future. What we hope is that this is not just our work. I hope that more researchers will jump in to do this kind of work. So for people developing AI across academia and industry, I hope they will start thinking bigger and broader, and not just see themselves as someone who does the engineering part or the training part of the AI, but think about the downstream impact of what they're doing... How is it going to impact people? That way, maybe we will steer away from the conversation that goes, "Well, it's inevitable. This thing is inevitable, and we need to be in the race." If more people have that sort of awareness, and more people listen to podcasts like this one and follow the work of the Center for Humane Technology, I think we will have technologies that are more thoughtful.

Pattie Maes: Yeah, and AI should become a more interdisciplinary endeavor, I think. Not just, again, the engineers, the entrepreneurs, and maybe the government as well, but we should have historians and philosophers and sociologists and psychologists, et cetera. They have a lot of wisdom about all of this, and so I think it has to become a much broader conversation, not just educating the entrepreneurs and the engineers to be a little bit more mindful. Yeah.

Daniel Barcay: I agree with that 100%. And as this technology can meet us in much deeper ways than any technology in the past, as it can touch our psychology, as it can intermediate our relationships, as it can do things out in the world, we're going to need all of those other specialties to play a part.

Pat Pataranutaporn: I think it's really clear that this is a really, really hard question. It touches on so many aspects of human life, not just our... I think right now, what people focus on is productivity: that AI will help people work better. But even productivity alone, or the area of work alone, also touches on the question of purpose. What does it mean to actually do something? It will change the way that we think about purpose, our meaning in life, and things like that.

So even this domain alone is never just about work by itself. That's why AI is a really hard question that requires us to think in many dimensions and in many directions at the same time. And we don't necessarily have all the answers to these big questions. But I think the more we can learn from other disciplines, the more we can learn from wisdom across cultures, across different groups of people, across expertise, the better we can start to comprehend this and gain clarity on the issue at hand.

Daniel Barcay: I'm so thrilled that you're doing this work. I'm so glad that you're in this world and that we get to work together and build on each other's insights. And thanks for coming on Your Undivided Attention.

Pattie Maes: Great to be here.

Pat Pataranutaporn: Thank you so much.

Pattie Maes: Thank you.
