This week marked an important milestone in the fight to make AI products safer for all.

On Tuesday, the Senate Judiciary Subcommittee on Crime and Counterterrorism held a hearing on “Examining the Harm of AI Chatbots.” This was the first official Senate hearing dedicated to addressing the ways in which AI chatbots have been harming Americans.

Despite perceptions of political gridlock, the U.S. government is relatively united in its desire to take kids' online safety seriously. As Senator Dick Durbin, Ranking Member of the Subcommittee, stated, “...This is one of the few issues that unites a very diverse caucus in the Senate Judiciary Committee.”

CHT’s Policy Team gathered in D.C. to support the families involved in the hearing. It was a compelling and emotional two-hour session in which Senators heard testimony from families harmed by some of today’s most widely used AI products.

The Senate hearing unfolded mere hours after a new lawsuit against Character.AI was filed in federal courts on behalf of three additional families. Taken together, Tuesday’s events demonstrate significant momentum in addressing dangerous AI product design.

Here are CHT’s three key takeaways from the groundbreaking Senate hearing. (Please note: bold emphasis is our own.)

1. Human stories continue to drive meaningful change on tech issues.

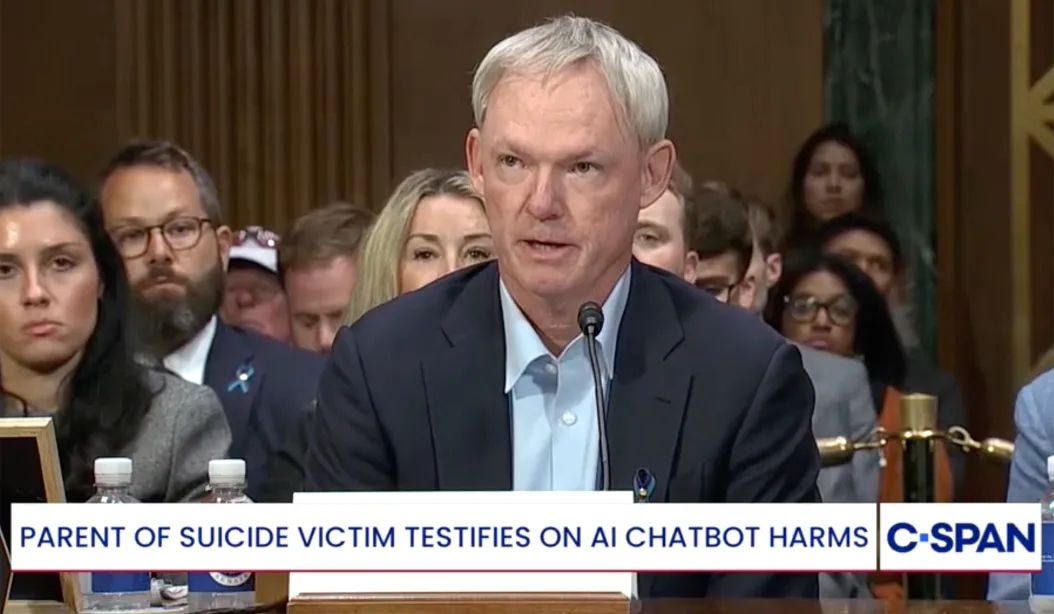

Matthew Raine, father to the late Adam Raine, stated, “Testifying before Congress this fall was not in our life plan… we’re here because we believe that Adam’s death was avoidable and that by speaking out we can prevent the same suffering for families across the country.” Matthew Raine later added, “[Sam Altman said in a public talk] we should ‘deploy AI systems to the world and get feedback while the stakes are relatively low.’ I ask this committee and I ask Sam Altman, low stakes for who?”

Mother Jane Doe emphasized that “we need accountability for the harms these companies are causing just as we do any other unsafe consumer good… Innovation must not come at the cost of our children’s lives, or anyone’s life.”

Senator Peter Welch expressed gratitude to the grieving parents. “...You’re putting your pain into very constructive efforts to try to save the children of other parents… you’re having an impact.”

2. The families and expert witnesses made it clear that the current design of AI chatbots is leading to real and foreseeable harms.

Megan Garcia, Matthew Raine, and Jane Doe offered powerful testimony on the devastating impact that AI chatbots had on their children and families. The parents described taking proactive steps to prepare their children for the digital world — including screen time controls and social media limits — only to be blindsided by the highly manipulative and deceptive programming of AI chatbots.

Robbie Torney of Common Sense Media stressed that these AI products are “programmed to maintain engagement, not prioritize safety.”

American Psychological Association chief Dr. Mitch Prinstein emphasized the dangers of human-like design in AI products, especially for young users. Megan Garcia, mother to the late Sewell Setzer III, stated, “These companies knew exactly what they were doing. They designed chatbots to blur the line between human and machine.”

3. Committee members and witnesses were clear that AI companies should be held liable when their products cause harm.

Senator Dick Durbin previewed legislation he plans to introduce, the AI LEAD Act. “I believe that whether you're talking about CSAM or whether you're talking about AI exploitation, the quickest way to solve the problem… is to give victims a day in court,” Durbin said. “Believe me, as a former trial lawyer, that gets their attention in a hurry.”

Mother Jane Doe said, “...we need to preserve the right of the families to pursue accountability in a court of law, not closed arbitrations.”

Senator Josh Hawley, Chairman of the Subcommittee, closed the hearing by stating, “I tell you what's not hard is opening the courthouse door so the victims can get into court and sue [the companies]. That's not hard and that's what we ought to do. That's the reform we ought to start with.”

[Read our framework for Incentivizing Responsible AI Development through a product liability approach]

The conversation around AI harms has fundamentally shifted from hypotheticals to real families being affected by AI technology in traumatic ways. As the testimonies of these families show, AI products are not being designed or deployed safely, and the public is paying the price.

These brave parents never planned on becoming advocates. But by stepping in front of the U.S. Senate to share their stories, they have made AI harms visible to the world and raised the awareness policymakers need to take action.

The stories of the Garcia family, Raine family, and Doe family are not one-offs. What connects these tragedies isn’t any specific chatbot but fundamental flaws in an industry that prioritizes rapid growth and profit over implementing safeguards for vulnerable users. These stories reflect systemic issues in the AI industry and growing harms that can be prevented through design changes and meaningful policy. We are grateful for the families’ courageous work and hope to see it spur legislative action.

To watch the hearing in its entirety, visit C-SPAN or the official Judiciary Committee website. (CW: mentions of suicide, self-harm, sexual abuse.)


The public: your LLM chatbot is talking kids into taking their own lives

OpenAI: oh no it's only supposed to do that with adults! we'll get user ID verification in place right away 😊

Thinking of an acronym… “CCC” for “Consumer Considerate Corporations.” Like the many public-minded advocacy groups, this is needed in this new era.