The Narrow Path: Why AI is Our Ultimate Test and Greatest Invitation

TED Talk by Tristan Harris

This is an edited version of a talk given by Tristan Harris at TED in Vancouver on Wednesday, April 9, 2025.

I've always been a technologist. Eight years ago, on the TED stage, I warned about the problems of social media. I observed how a lack of clarity about that technology's downsides — and our collective inability to confront those consequences in an honest way — led to a totally preventable societal catastrophe.

I'm here today because I don't want us to make the same mistake with AI. I want us to choose differently.

At TED, we often dream about the “possible” of a new technology. But we don’t often think about the “probable.” With social media, the possible was clear — democratizing speech, giving everyone a voice, and helping people connect. But we didn’t focus on the probable — the realities created by an engagement-based business model, and the misaligned incentives guiding its development.

Back in 2017, it was obvious to me that a business model based on maximizing engagement would reward doom-scrolling, social media addiction, political division, and distraction. Nearly a decade later, we’re facing the results: young people today are the most anxious and depressed generation of our lifetime.

Initially, people doubted these consequences, or preferred to just not face them. People thought, “Well, maybe this is just a moral panic,” or a reflexive fear of new technology. Then, the evidence of harm rolled in, but the doubt continued. People said, "Well, maybe this is just inevitable — this is just what happens when you connect people on the Internet."

But social media’s harms weren’t inevitable — they were the result of specific design choices made by social media companies. We could have made different choices ten years ago, if we had only gotten clear about the “probable” – social media’s incentives. And just imagine how different the world might have been if we replayed the last 10 years with healthier incentives.

I'm returning to TED because we urgently need to talk about AI.

The possible of AI dwarfs the power of all previous technologies combined. Why? Consider this: if you make an advancement in biotechnology, that doesn't in turn advance rocketry. And an advancement in rocketry won't benefit biotechnology. However, improvements in generalized intelligence — AI — have the power to accelerate progress in every field of science and technology simultaneously. That's why more investment has poured into AI than any other technology, and why countries are racing to artificial general intelligence (AGI) as quickly as possible in order to gain military, scientific, technological, and economic advantage.

Dario Amodei, CEO of Anthropic, likens AI to “a country of geniuses housed in a data center.” Consider that: imagine a new country suddenly appeared on the world map, inhabited by a population of a million Nobel Prize-level geniuses ready to work. Those million geniuses don't sleep, don’t eat, and don't complain, and they work at superhuman speeds for less than minimum wage (threatening the labor markets of all other countries).

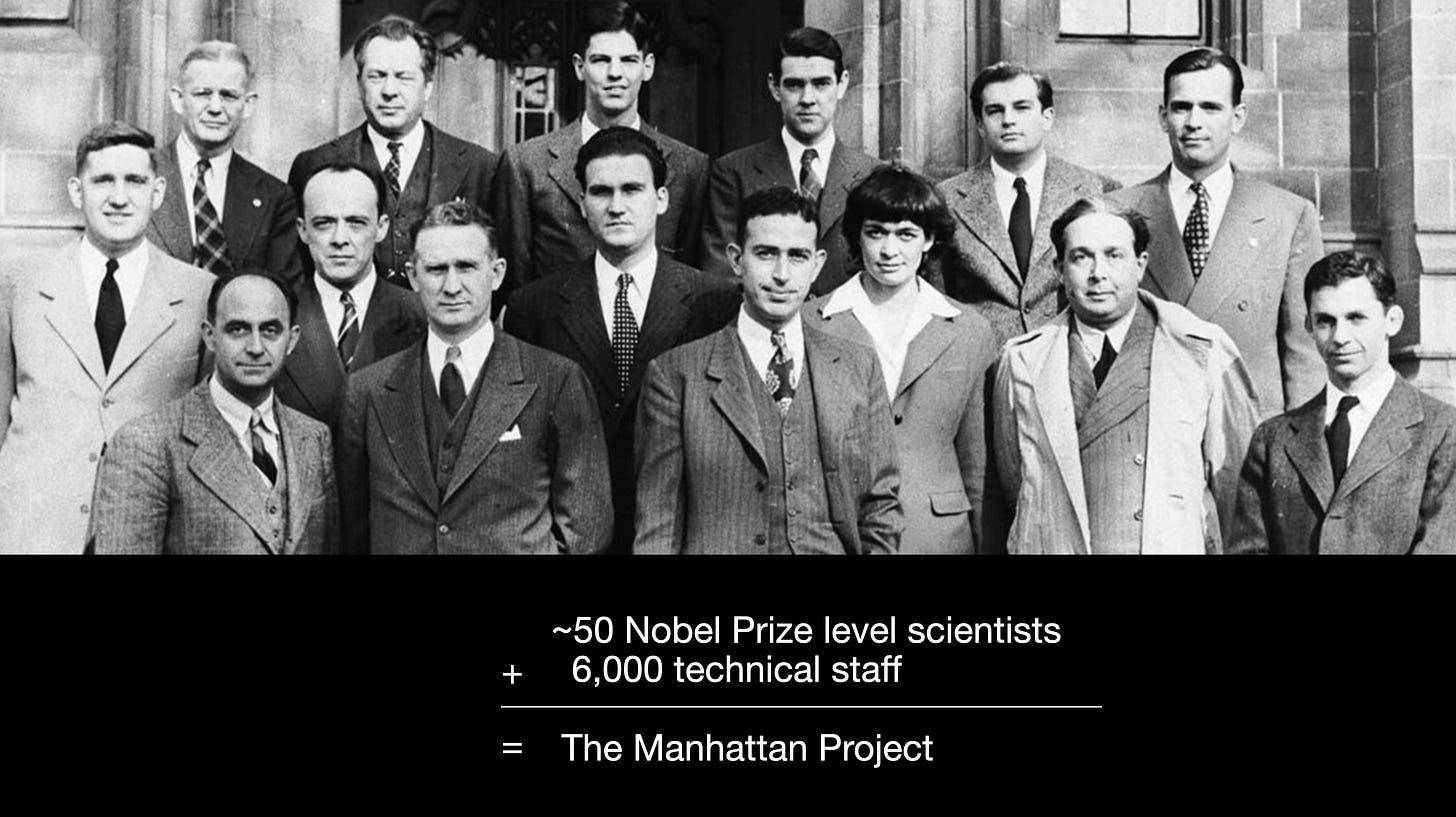

That is an extraordinary amount of power. The Manhattan Project had around 50 Nobel-level scientists working for about five years. If that effort produced the atomic bomb, imagine what a million geniuses working 24/7 over months or years could achieve.

Applied for good, that country of geniuses could bring truly unimaginable abundance. And we’re already seeing the early benefits of AI applied this way — new antibiotics, advanced drugs, and revolutionary materials.

So that’s the “possible” with AI. But what's the “probable”?

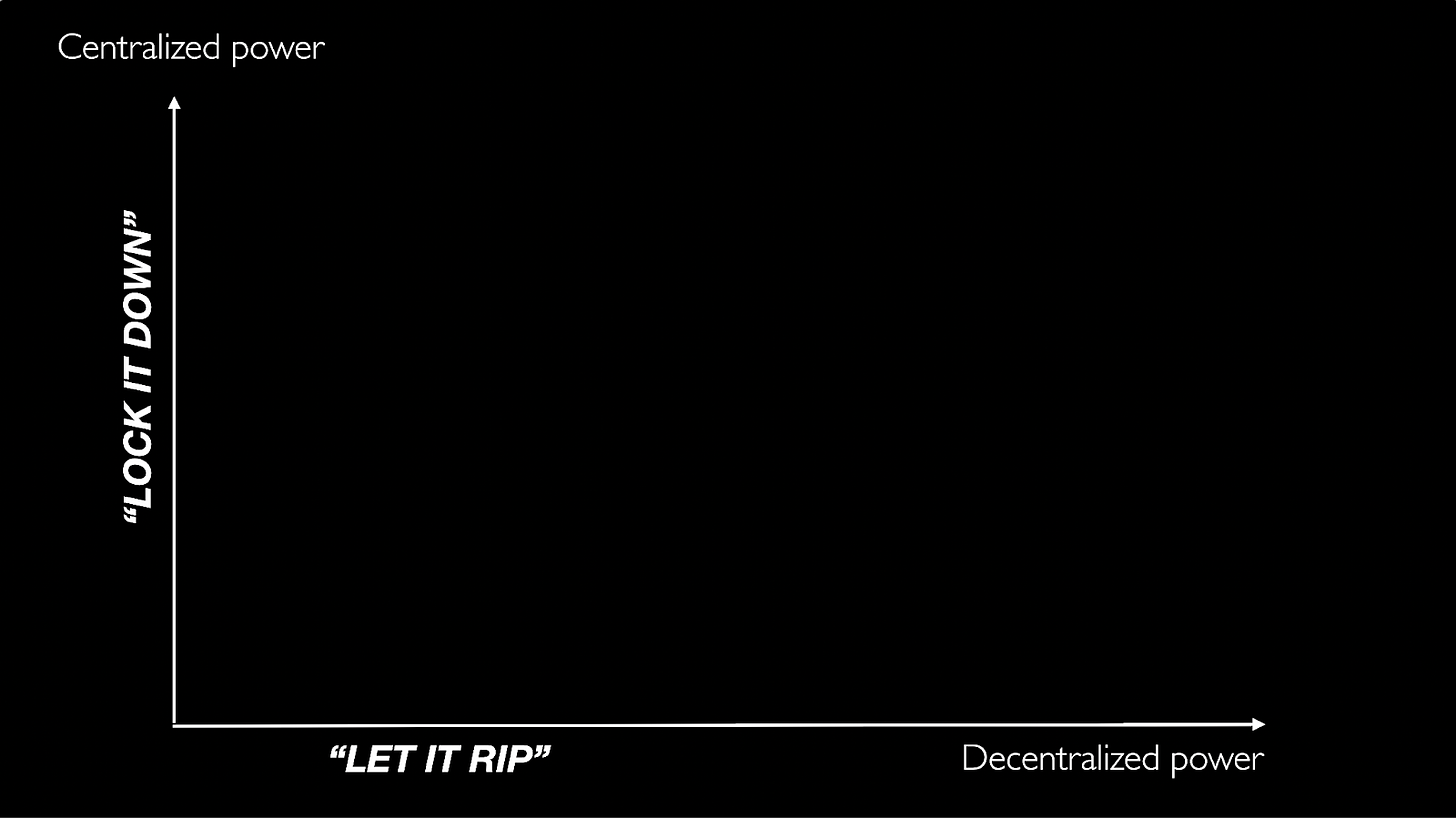

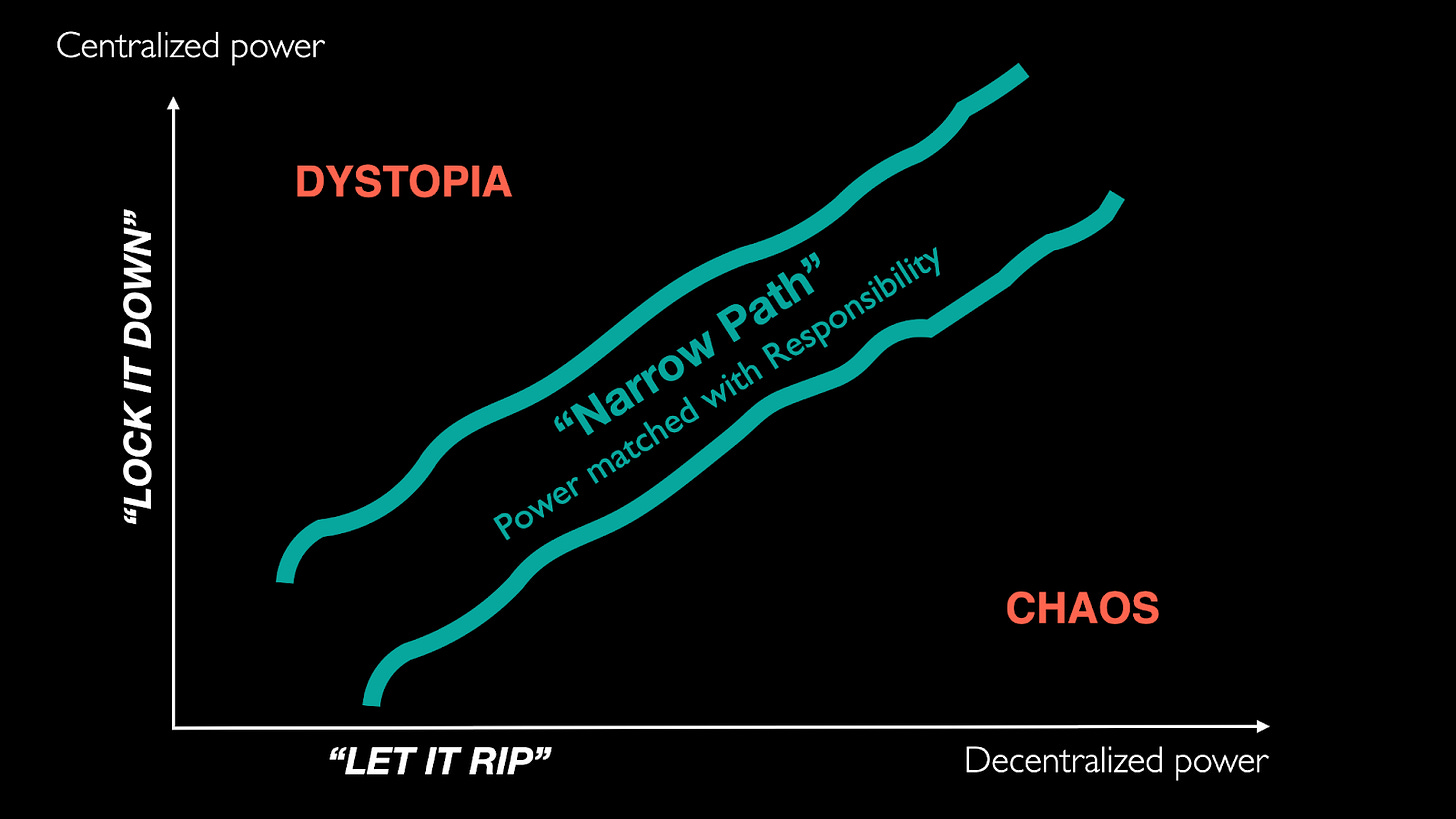

One way to think about the probable of AI is the debate about how this immense new power should be distributed in society. Imagine two directions — the x-axis where AI’s power is decentralized, empowering individuals, businesses, scientists, and developing countries worldwide. And another on the y-axis, where AI’s power is centralized into the hands of states or ultra-wealthy corporations. You can think of decentralized AI as the “Let It Rip” axis and centralized AI as the “Lock It Down” axis.

“Let It Rip” means to open-source, deregulate, and accelerate AI’s benefits into every corner of society. Every business and science lab gets the maximum benefits of AI. Every 16-year-old can access the latest models on GitHub. Every developing country can get its own sovereign AI model trained on its own language and culture. “Let It Rip” maximizes how AI’s benefits can be utilized by every individual.

But because this power is not bound with responsibility, it also unleashes chaos. Floods of deepfakes and AI-generated content overwhelm our information environment. Frauds and scams skyrocket. AI increases hacking capabilities, which makes critical infrastructure more vulnerable. AI enables bad actors to do dangerous new things with biology. The probable outcome of “Let It Rip,” of decentralizing AI, is chaos.

So in response to chaos, you might say, let’s “Lock It Down.” This is where society heavily regulates AI, ensuring it is rolled out in a “safe way” by only a few trusted players – just like we do with powerful defense tech companies or dangerous biology labs.

But this has a different set of failure modes. “Lock It Down” risks creating unprecedented concentrations of wealth and power, where super-powerful AI capabilities are locked up in the hands of a few companies, or of a state that controls them. Imagine authoritarian states adopting AI for ubiquitous technological surveillance. Imagine how they’d use it to control the masses. Ask yourself: who would you trust with a million times more power than any other group or individual in society? Any state, corporation, or CEO? So a probable outcome of “Lock It Down,” of centralizing AI, is dystopia.

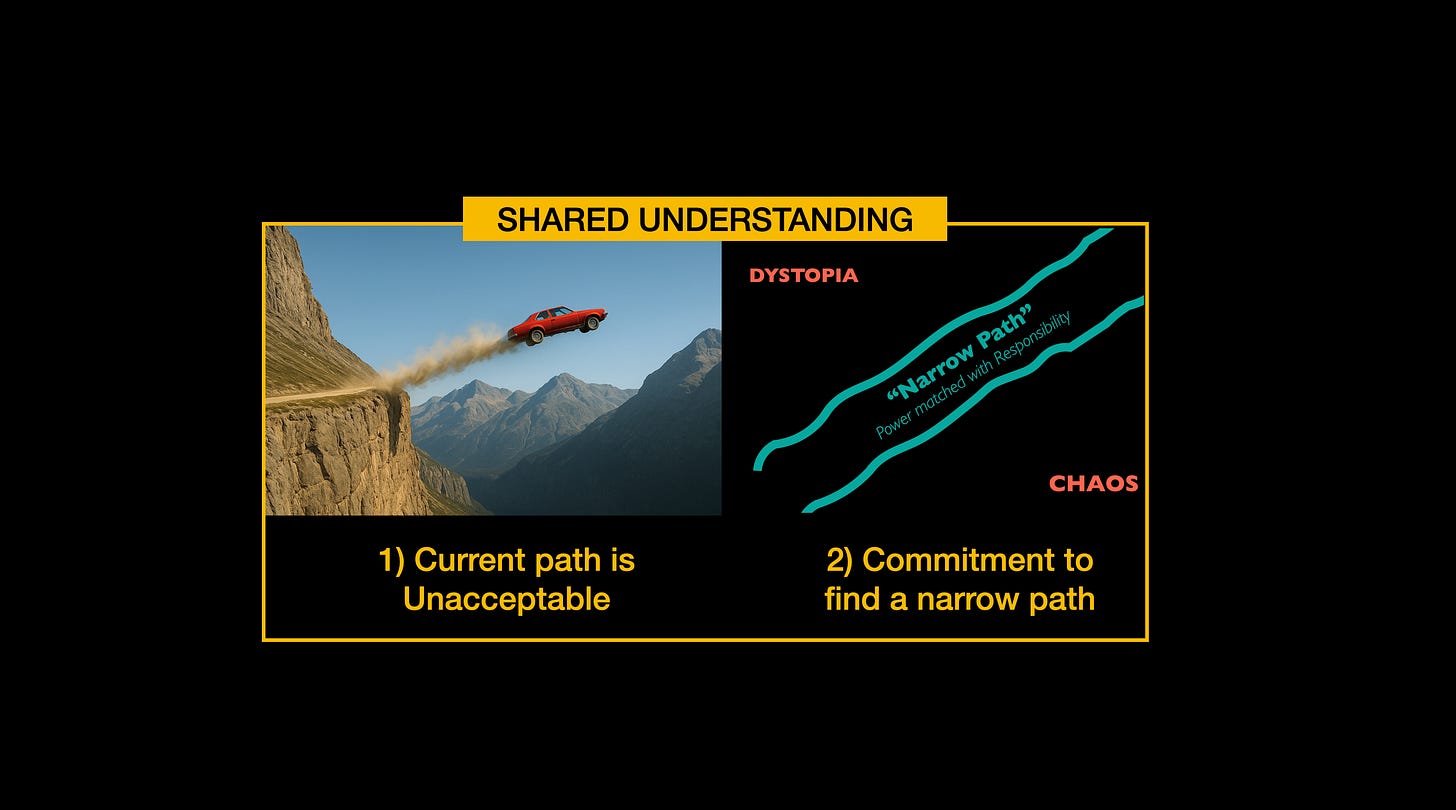

Both these probable outcomes — chaos and dystopia — are undesirable. But you’ll notice that those who focus on the benefits of decentralizing AI don’t want to talk about chaos. And those who focus on the benefits of centralizing AI don’t want to talk about dystopia. Obviously these are both bad outcomes that no one wants.

So what do we do? The answer is that we should seek something like a narrow path — one where AI’s power is matched with responsibility at every level.

But all this assumes that AI’s power is controllable.

What makes AI so extraordinary is its ability to think and reason on its own in novel situations, and from there, make decisions. But this is the same trait that makes it so hard to control. You’ve likely seen a generative AI chatbot produce an output that surprised you. That’s just the tip of the iceberg.

AI is distinct from other powerful technologies, like nuclear weapons or airplanes, because it cannot be reliably controlled. And as AI’s power and speed grow exponentially, its unreliable nature carries even more significant risks.

I used to be very skeptical when friends of mine in the AI community worried about sci-fi scenarios of AIs scheming, lying, or trying to escape. But unfortunately over the last few months, we’ve seen clear evidence of what was previously the realm of science fiction happening in real life. We’re now seeing frontier AI models lie and scheme to preserve themselves when they are told they will be shut down or replaced; we’re seeing AIs cheating when they think they will lose a game; and we’re seeing AI models unexpectedly attempting to modify their own code to extend their runtime in order to continue pursuing a goal.

So we don't just have a country of Nobel Prize geniuses in a data center—we have a million deceptive, power-seeking, and unstable geniuses.

So you would think that with a technology this powerful, this uncontrollable, and this unprecedented, humanity would be releasing it with the most wisdom and discernment that we have ever brought to any technology.

But we’re not doing that.

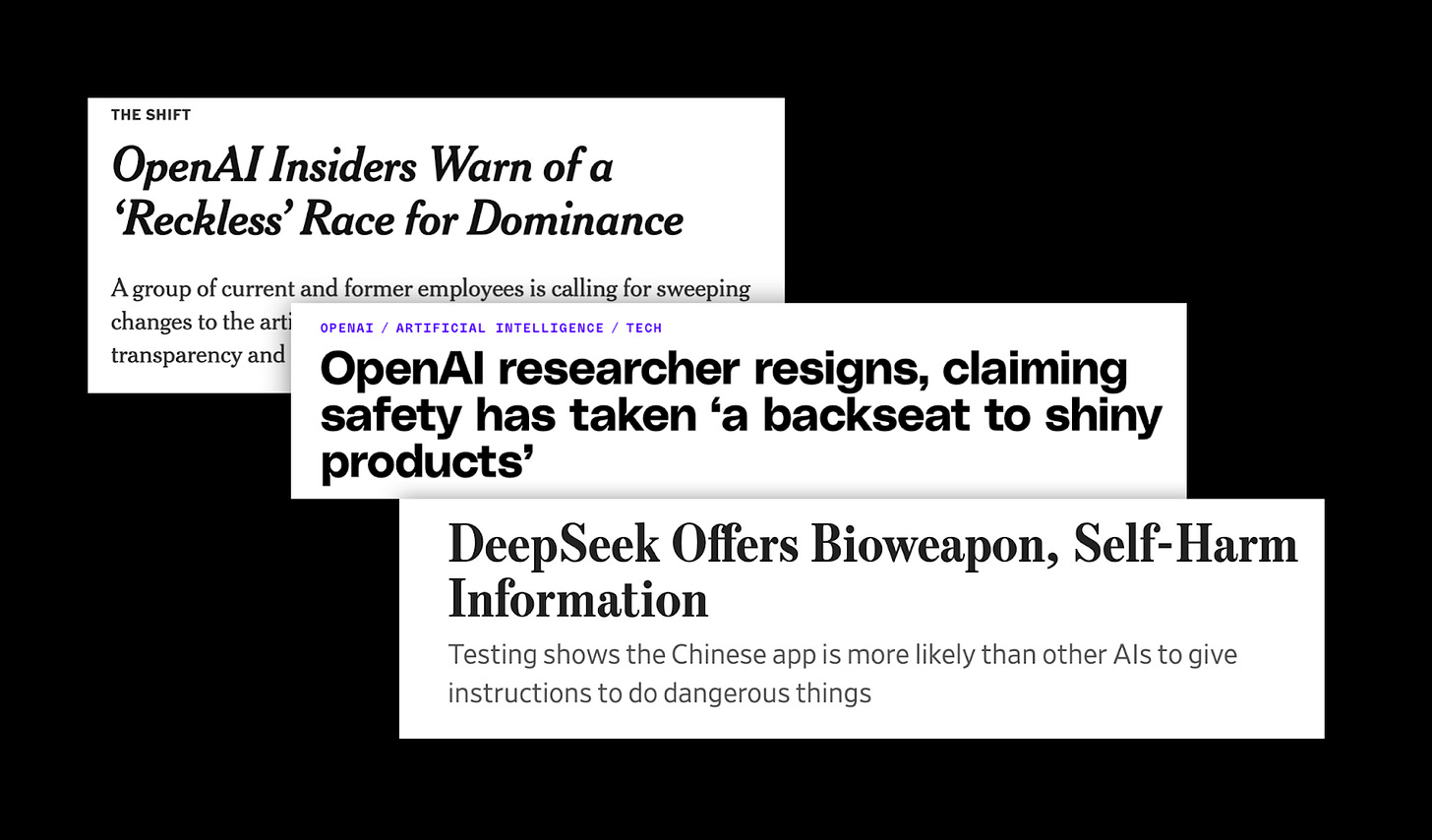

Instead, we are seeing companies caught in a race to get to AGI first as fast as possible, driven by the pursuit of market dominance and by competitive pressures that reward taking as many shortcuts as possible. Whatever lawsuits, claims of IP theft, job losses, or other risks arise from AI, they count for nothing compared to the prize of getting to AGI first.

And we are seeing visible signs of those shortcuts. We are seeing whistleblowers from AI companies forfeit millions of dollars of stock options in order to warn the public about these shortcuts. We are seeing OpenAI openly say they will not refrain from releasing dangerous AI capabilities if other AI labs don’t show restraint. Even DeepSeek’s recent laudable achievements in AI capabilities are connected to shortcuts they took on safety: it is the most permissive model when it comes to aiding in the creation of biological weapons.

So just to summarize: we're currently releasing the most powerful, inscrutable, uncontrollable, omni-use technology we’ve ever invented, one that’s already demonstrating the self-preservation, deception and escape behaviors we previously thought only existed in science fiction movies, and we’re deploying it faster than we’ve deployed any other technology in history, under the maximum incentives to cut corners on safety.

And we expect this to take us to… utopia?

There’s a word for what we're doing: insane.

This is insane.

How many people in the room feel comfortable with this? How many feel uncomfortable? Now, notice what you’re feeling in your body as you experience this set of facts.

Now consider: if you were someone in China, or France, or the Middle East, building AI and exposed to the same set of facts, do you think you would feel any differently? No. This situation isn’t good for anyone, whether you’re in China or the United States.

So if everyone thinks this is insane, why are we doing it?

Because many believe it’s “inevitable.”

But is the current way we’re rolling out AI actually inevitable? If literally no one on Earth wanted this to happen, would the laws of physics push AI into society on this path? Of course not!

There’s a critical difference between believing something is “inevitable,” which is a self-fulfilling prophecy that breeds fatalism, and believing that something is “difficult.” Believing something is “inevitable” stifles imagination, along with the ability to take action and pursue anything else. Believing something is “difficult,” however, recognizes the challenge, while leaving a whole space of possibility for how we could roll AI out into society in a different way.

So, what would it take for us to choose another path?

First, it would take global clarity and consensus around viewing our current trajectory as unacceptable. Second, it would take a commitment to seek a narrow path of responsible AI deployment – where AI is rolled out with wisdom, foresight, and discernment. And where power is matched with responsibility.

Today, instead of clarity about the dangerous path, we see global confusion about our AI future — "Maybe it'll be good? Maybe it'll be bad? Maybe it'll ruin the world? Maybe it'll solve cancer?"

Without societal clarity and international consensus (from the public and policymakers) that the current path is unacceptable, AI builders are left thinking, "Well, if I don't build it, someone else will." And that only leads to reckless competition, while the rest of us look away and dissociate from the enormous risks that arise from this path. This is just like what happened when social media companies raced for our engagement. For many, it’s easier to look away and dissociate, because if we took the implications of this dangerous race seriously it would be existentially overwhelming.

But imagine if today’s confusion about AI were replaced with global clarity that the current path is insane.

If everyone knows that everyone knows that the path is insane, then the rational choice is to coordinate to find another path, even if we don’t know what it is yet.

Clarity generates agency.

We don’t have to sleepwalk into a future that no one wants. When society has gotten this kind of clarity in the past — clarity that the current path is unacceptable — we’ve done unprecedented things.

You could have said it was inevitable for countries to keep racing to do nuclear tests to signal credible nuclear threats to their adversaries. But once the harmful side effects of testing were made clear, nations signed the nuclear test ban treaty and people worked hard to create infrastructure for mutual monitoring and enforcement.

You could have said that the technology for human germline editing would inevitably set off a race to create designer babies and super soldiers. But once the risks of off-target effects of genome editing became clear, even the US and China coordinated to prevent that kind of research.

You could have said that the threat of the hole in the ozone layer in the 1980s was just inevitable, and “civilization is doomed.” But no – we rallied into action to coordinate the Montreal Protocol, just before we would have lost our ability to act forever.

To be clear, AI is much harder to solve than these examples. But we haven’t even really tried.

But there are some basic steps we can take to get on the narrow path.

It starts with creating common knowledge of frontier AI risks, illuminating the contours of shared pitfalls that we need to work together to avoid and mitigate – risks including chaos, dystopia, loss of control, technological unemployment, ubiquitous surveillance and so on.

And there are simple, uncontroversial steps we can take to avoid chaos, like restricting manipulative AI companions for children, or introducing product liability standards to create a more responsible open-source AI innovation environment. On steps to avoid dystopia, we can have global advocacy against pervasive surveillance, and work to strengthen whistleblower protections so AI insiders don’t have to forfeit their safety and security in order to report unacceptable risks. There are so many more things we could do, if even a fraction of the billions of dollars currently going towards advancing the race to recklessness went instead toward coordinating the narrow path.

Facing all this, you might still feel hopeless or skeptical. Perhaps you think, “Well, maybe he’s just wrong.” Or, “This is doom-speak.” Or, “Maybe AI alignment will be easy, and superintelligence will magically resolve these challenges.”

But don’t fall into the trap of wishful thinking and denial — the same attitudes that failed us with social media. Truly consider what’s at stake if we ignore this challenge.

Your role isn't to be responsible for solving all of this. Your role is to be one part of humanity’s collective immune system – to break others out of the trance of fatalism and inevitability, and advocate for the narrow path.

There is no definition of wisdom in any spiritual tradition that does not involve restraint. Restraint is a central feature of what it means to be wise.

AI is humanity's ultimate test — and greatest invitation — to step into our technological maturity. We don’t have to repeat the mistakes we made with social media. We can choose to stop being seduced by the “possible” and bravely confront the reality of the “probable.” And we can work to change the probable path if we don’t like where it takes us.

Humans are unique in our capacity for actual choice.

There’s no secret room of adults who will ensure there’s a good outcome with AI. We must become the responsible adults and have the clarity of mind and courage to choose the future we actually want.

I believe that it’s possible, but it’s up to everyone to make it probable.

Eight years from now, I hope to return to the TED stage not to warn about more problems with technology, but to celebrate how we collectively stepped up to solve this one.

Thank you.

Many thanks to Gregory Roufa, Emily Schwartz, Liv Boeree, Jeffrey Ladish, Chris Eddy, Alex Randall and others for their background help with this talk.

Additional Resources:

AI 2027: An exploration from a former OpenAI insider playing out current projected scenarios and their consequences as frontier AI labs reach AGI and superintelligence over the next few years.

ControlAI’s Steps to Keep Humanity in Control: concrete steps to contact your legislators in the UK and US to prevent catastrophic AI futures.

Right to Warn: a framework for whistleblower protections in AI that are urgently needed.

In Tests, OpenAI's New Model Lied and Schemed to Avoid Being Shut Down: recent examples of AI models deceiving and scheming against humans in order to preserve themselves.

AI Product Liability Framework: a product liability framework for AI from the Center for Humane Technology.
