OpenAI unveiled its new o3 model in late December, marking a key inflection point in AI technology. It’s not yet available to the general public as I’m writing this. However, early testing has shown large jumps in performance (around 1.5x of its predecessor, o1) across benchmarks in coding, logic, and problem-solving. This was true even on ARC-AGI, a benchmark designed to be particularly challenging for AI systems.

o3’s strong coding performance has proven better than many competitive human programmers in complex scenarios. This is a big deal because coding unlocks so much automation, accelerating AI advancement and shrinking the role of humans.

Understanding o3’s Capabilities

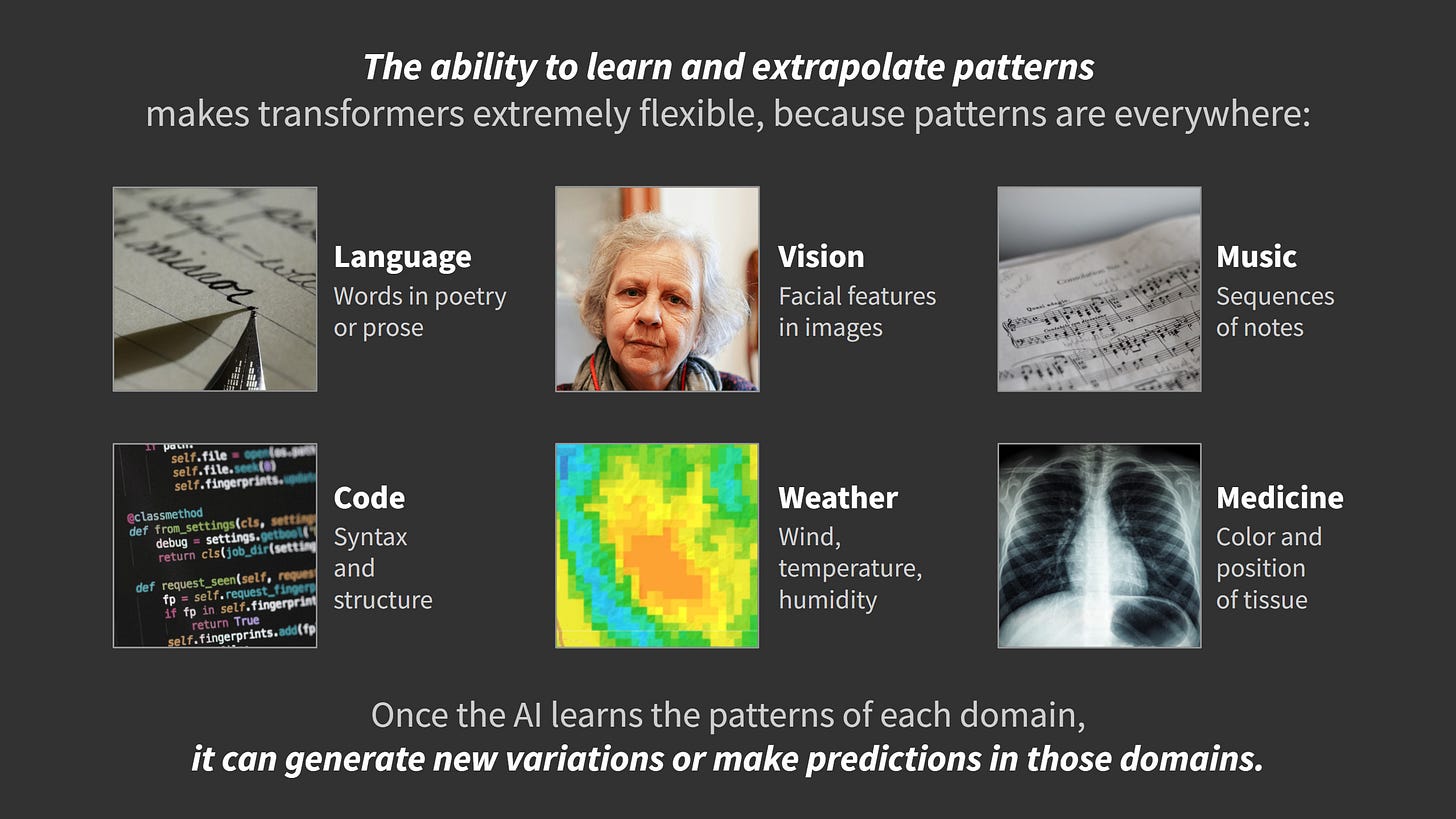

Transformers, the core architectures driving modern AI, are really good at learning and extrapolating patterns of all kinds, given enough neurons (flexibility to capture patterns) and training data (examples of patterns).

This makes transformers extremely flexible, because patterns are everywhere, including:

Language: Choices of words in poetry or prose

Vision: The location and appearance of facial features in images

Music: Sequences of beats and melodic notes

Code: Syntax and structure of computer code

Weather: Combinations of wind speed, temperature, and humidity

Medicine: Color and position of organs and abnormalities in medical imaging

Once the AI learns the patterns of each domain through its training process, it can then create new variations or make predictions based on those patterns.

o3 extends this formula into the realm of algorithmic patterns: it can learn and extrapolate sequences of reasoning steps (“chains of thought”) to achieve goals.

For example, if you ask o3 what would happen if a glass filled with water is pushed off a table onto a stone floor, it would go through a sequence of thoughts like this:

Gravity takes effect on the glass, moving the glass forward and down

The glass decelerates suddenly when it hits the floor

This shatters the brittle glass

The water, no longer contained, splashes out across the floor

There would be broken glass across the floor, along with a puddle of water

When training, o3 explores many different “chains of thought” solutions to problems. It’s deliberately given a very wide space to explore — so much so that many solutions are nonsensical. That doesn’t matter because each chain of thought is scored, including intermediate steps, based on how close it gets to the correct solution. The scoring is done by a reward model, typically trained on human ratings of similar problem-solution pairs. The best solutions rise to the top, and bad ones are discarded.

This method works very well in areas like coding or math, where there is a clear right answer that can be verified (by substituting values into equations or running code). In contrast, areas like creative writing or poetry are much harder to score objectively. So those benchmarks don’t change much with o3.

After exploring all these algorithmic patterns during training, o3 can then apply them at “inference time,” when a user asks o3 to solve a problem. The model can pick appropriate patterns for the problem it’s given, and try many solution variations. So o3 can usually get better results by thinking longer at inference time.

All this extra thinking does come at a cost, though. For example, on the difficult ARC-AGI benchmark, o3 used 172x more computation (plus associated energy and water) to increase its score from 76% to 88%.

What conclusions can we draw from all this?

Predictions in the AI space are always uncertain, but there are a few things I can say with reasonable confidence:

Models that learn by experimentation on their own (via “reinforcement learning”) are less sensitive to the quality and quantity of human data. The experimentation process exposes the model to many possibilities that would otherwise have to be found in human data and fed to the model.

o3 demonstrates how to efficiently train smaller reasoning models. You can use a big model and/or a lot of inference-time computation to explore a vast space of potential solutions (many of which will fail) and then cheaply train a smaller model on just the successful patterns.

Training and inference costs will come down dramatically. We’ve seen this consistently, driven by improvements in hardware and algorithmic optimization (including efficient training of smaller, cheaper models). For example, o3-mini outperforms the much larger o1 model.

Competing models will copy o3’s approach. The DeepSeek-R1 reasoning model from China came out with strong results shortly after o1 was announced, and many others will follow o3’s proof of concept.

Humans are being hired to write out new patterns to train on. In areas where AI models underperform, companies are hiring human experts—often in harsh working conditions and at low wages—to write “chains of thought” for complex tasks. Then, that information is fed to AI models to learn and extrapolate from. (And then those human contributions become much less valuable.)

o3-style models will open up a lot of performance headroom for 2025. It’s a new type of scaling that promises to be considerably more efficient than just scaling up neural network size and data, which has already run into significant challenges. As expected, 2025 promises to be a critical inflection point.

Suggested reading

Jack Clark’s breakdown of OpenAI’s o3 model is an excellent read on this topic.

The ARC-AGI blog has a detailed technical writeup on o3’s high score.

![[ Center for Humane Technology ]](https://substackcdn.com/image/fetch/$s_!zQw4!,w_80,h_80,c_fill,f_auto,q_auto:good,fl_progressive:steep,g_auto/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Feeb3c25f-26ad-4fcb-b5b4-aa265d0b8dcf_1063x1063.png)

![[ Center for Humane Technology ]](https://substackcdn.com/image/fetch/$s_!zQw4!,w_36,h_36,c_fill,f_auto,q_auto:good,fl_progressive:steep,g_auto/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Feeb3c25f-26ad-4fcb-b5b4-aa265d0b8dcf_1063x1063.png)